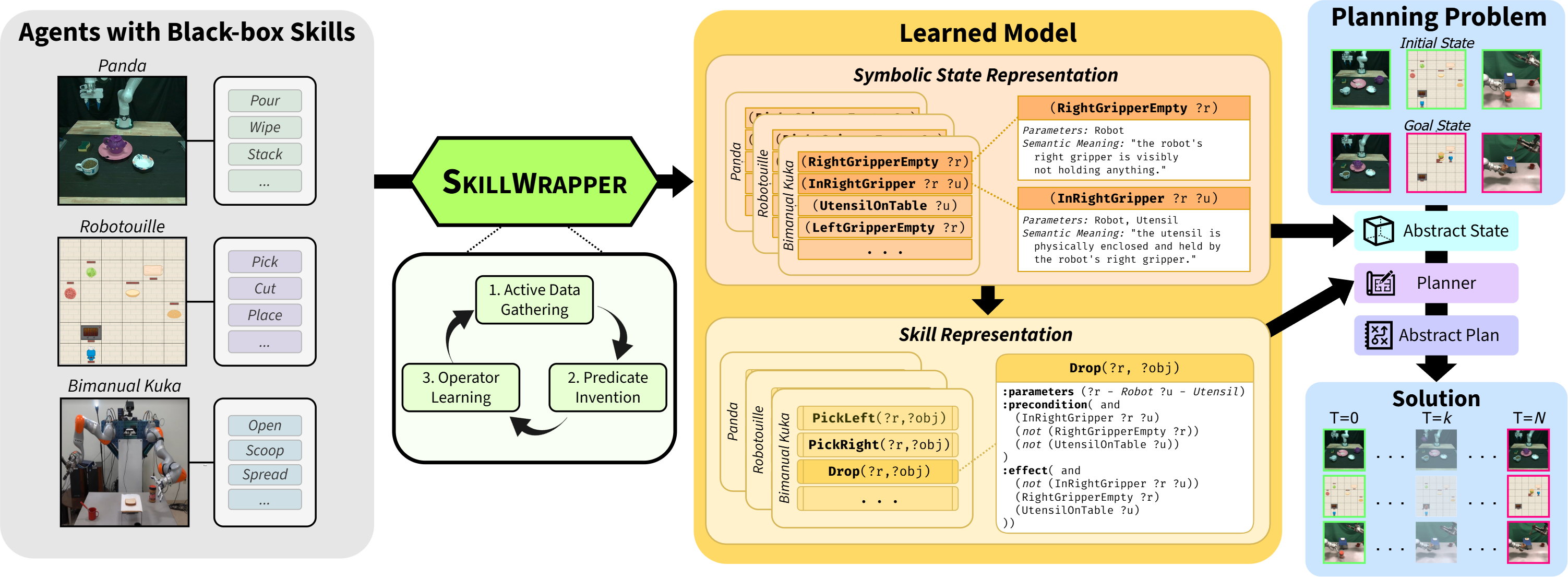

SkillWrapper: Generative Predicate Invention for Skill Abstraction

TL;DR – This paper introduces SkillWrapper, a system for creating abstract representations of skills using foundation models.

TL;DR – This paper formalizes the concept of object-level planning and discusses how this level of planning naturally integrates with large language models (LLMs).

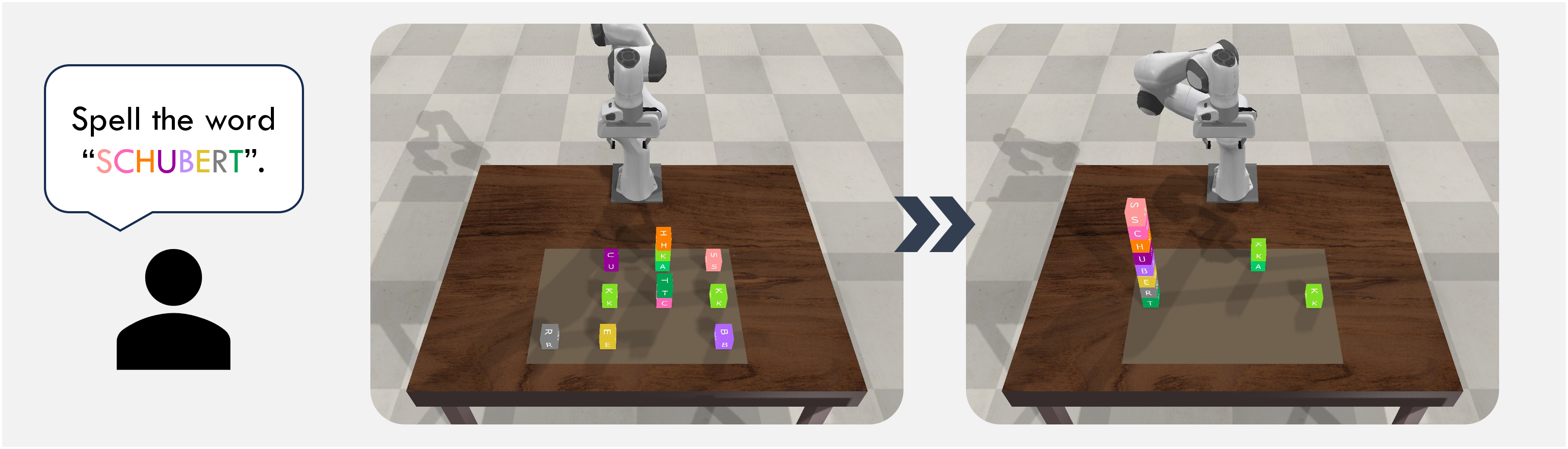

TL;DR – In this paper, we introduce CAPE, an approach for correcting errors encountered during robot plan execution. We exploit the ability of large language models to generate high-level plans and to reason about the causes of errors.

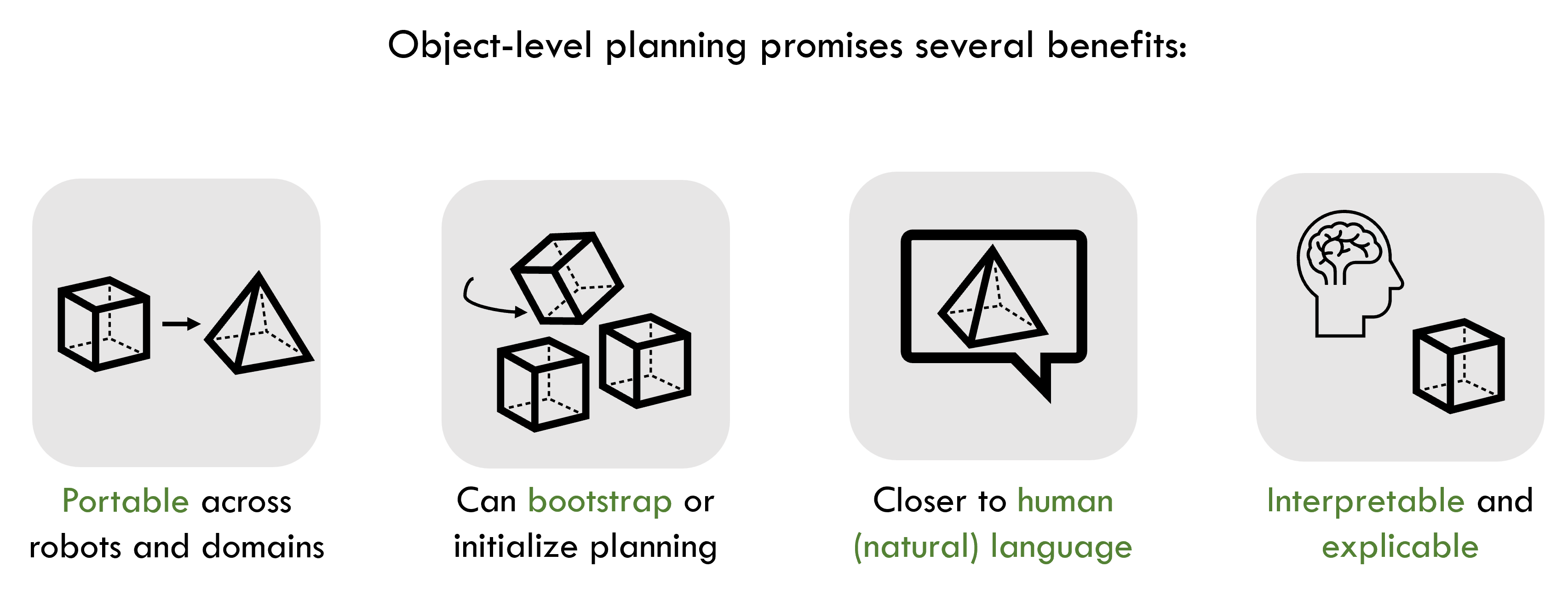

TL;DR – This workshop paper (specifically, a blue-sky submission) argues for the importance of object-level planning and representation as an additional layer on top of task and motion planning. I present several benefits of object-level planning for long-term use in robotics.

TL;DR – This was a collaboration with Clemson University’s Yunyi Jia and Yi Chen, who were interested in using FOONs to represent assembly tasks. They successfully adapted a FOON for robotic assembly execution.

TL;DR – In this paper, we introduce the idea of connecting FOONs to robotic task and motion planning. We automatically transform a FOON graph, which exists at the object level (i.e., it is a representation that uses meaningful labels or expressions close to human language), into task planning specifications written in PDDL, which is a far less intuitive way to communicate about tasks.

TL;DR – In this paper, we attempt to execute task plan sequences extracted from FOONs. Since these sequences may contain actions that are not executable by a robot, we introduce a human assistant in planning, and the robot and assistant work together to solve the task.

TL;DR – This was my first survey paper, covering knowledge representations for service robotics. Although it is dated, it reviews an extensive list of approaches used to represent knowledge for several robot sub-tasks.

TL;DR – In this paper, we explore methods in natural language processing (NLP) – specifically semantic similarity – for expanding or generalizing knowledge contained in a FOON. This alleviates the need for demonstrating and annotating graphs by other means.

TL;DR – This was the very first paper on FOON: the functional object-oriented network. Here, we introduced what FOONs are and how they can be used for task planning. Their main advantages are flexibility and human interpretability.