SkillWrapper: Generative Predicate Invention for Skill Abstraction

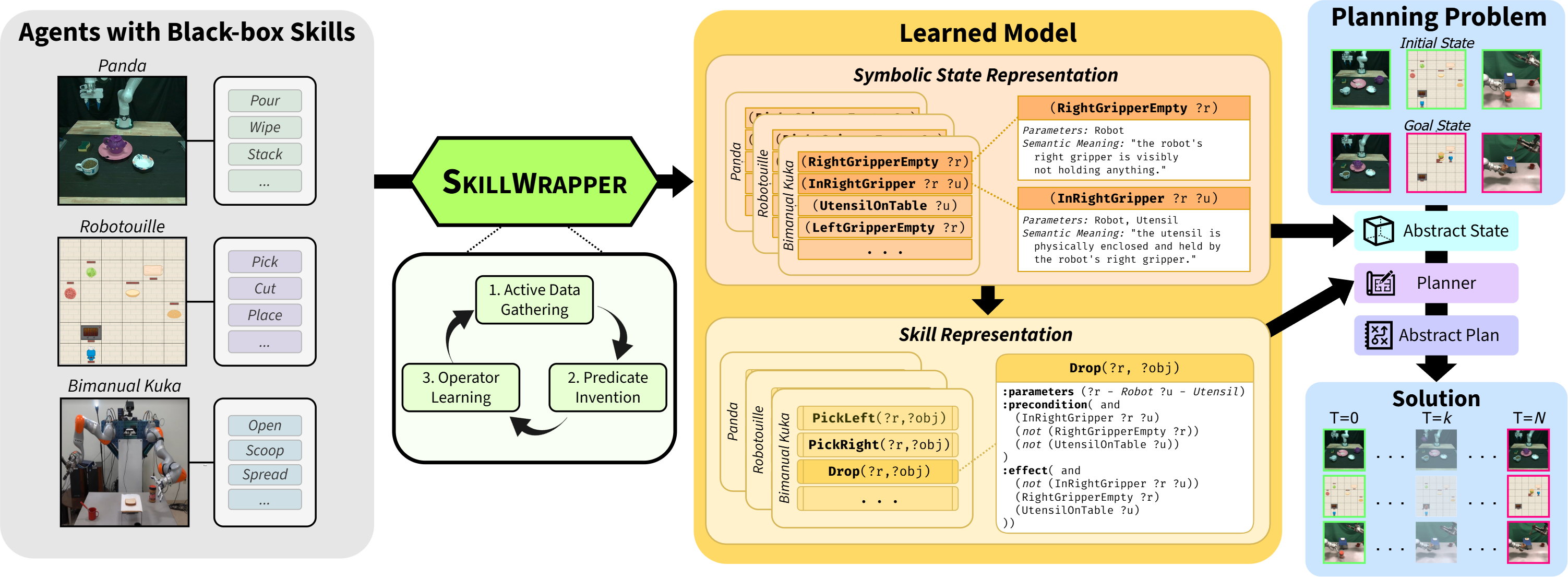

TL;DR – This paper introduces SkillWrapper, a system for creating abstract representations of skills using foundation models.

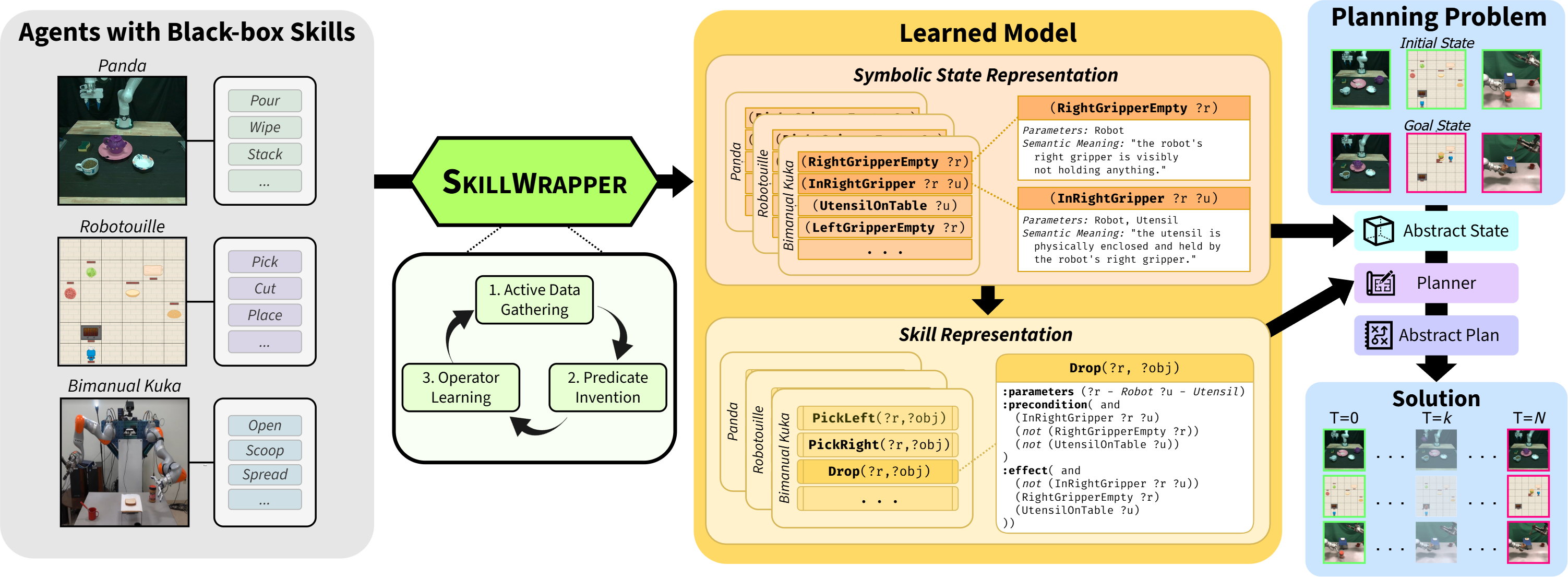

TL;DR – This paper formalizes the concept of object-level planning and discusses how this level of planning naturally integrates with large language models (LLMs).

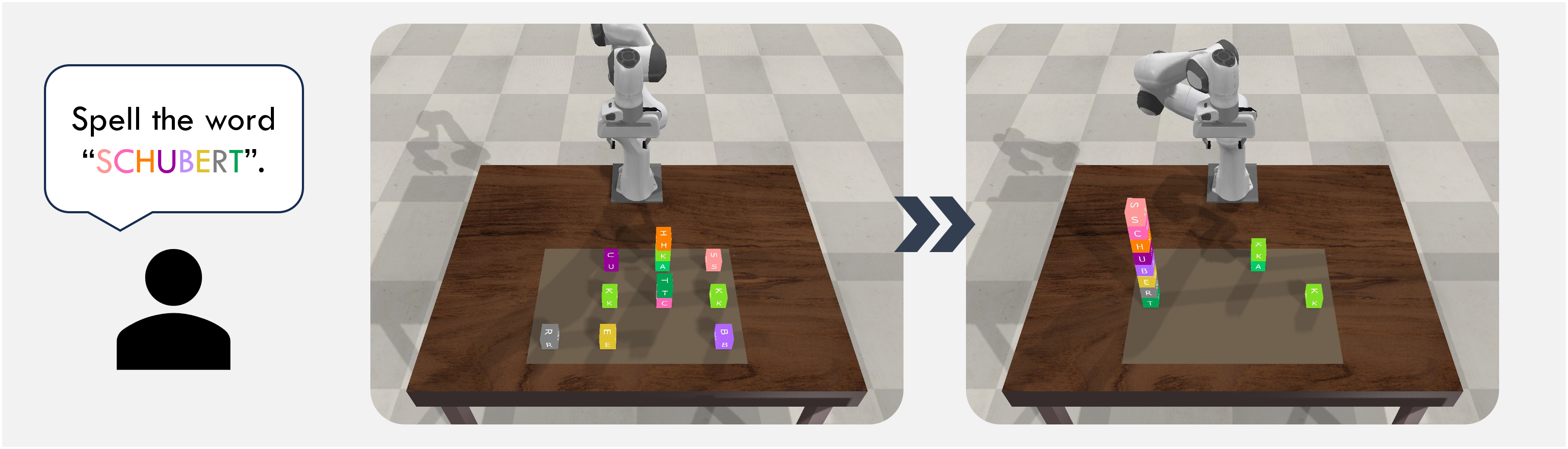

TL;DR – Building on prior work (Lang2LTL, CoRL 2023), this paper introduces a modular system that enables robots to follow natural language commands containing spatiotemporal referring expressions. The system combines multimodal foundation models with linear temporal logic (LTL).

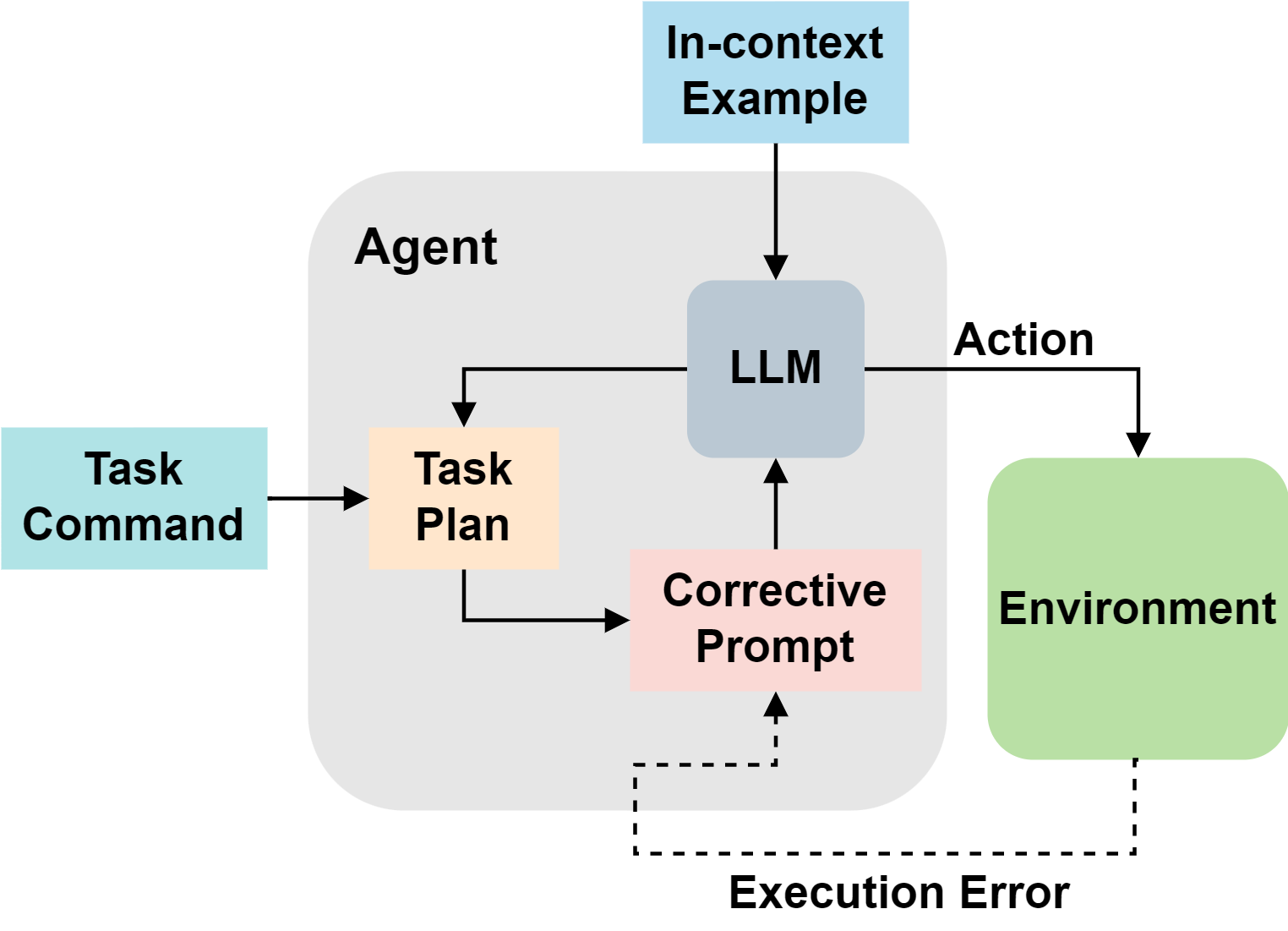

TL;DR – In this paper, we introduce CAPE, an approach for correcting errors encountered during robot plan execution. We exploit the ability of large language models (LLMs) to generate high-level plans and to reason about the causes of errors.