Developing Motion Code Embedding for Action Recognition in Videos

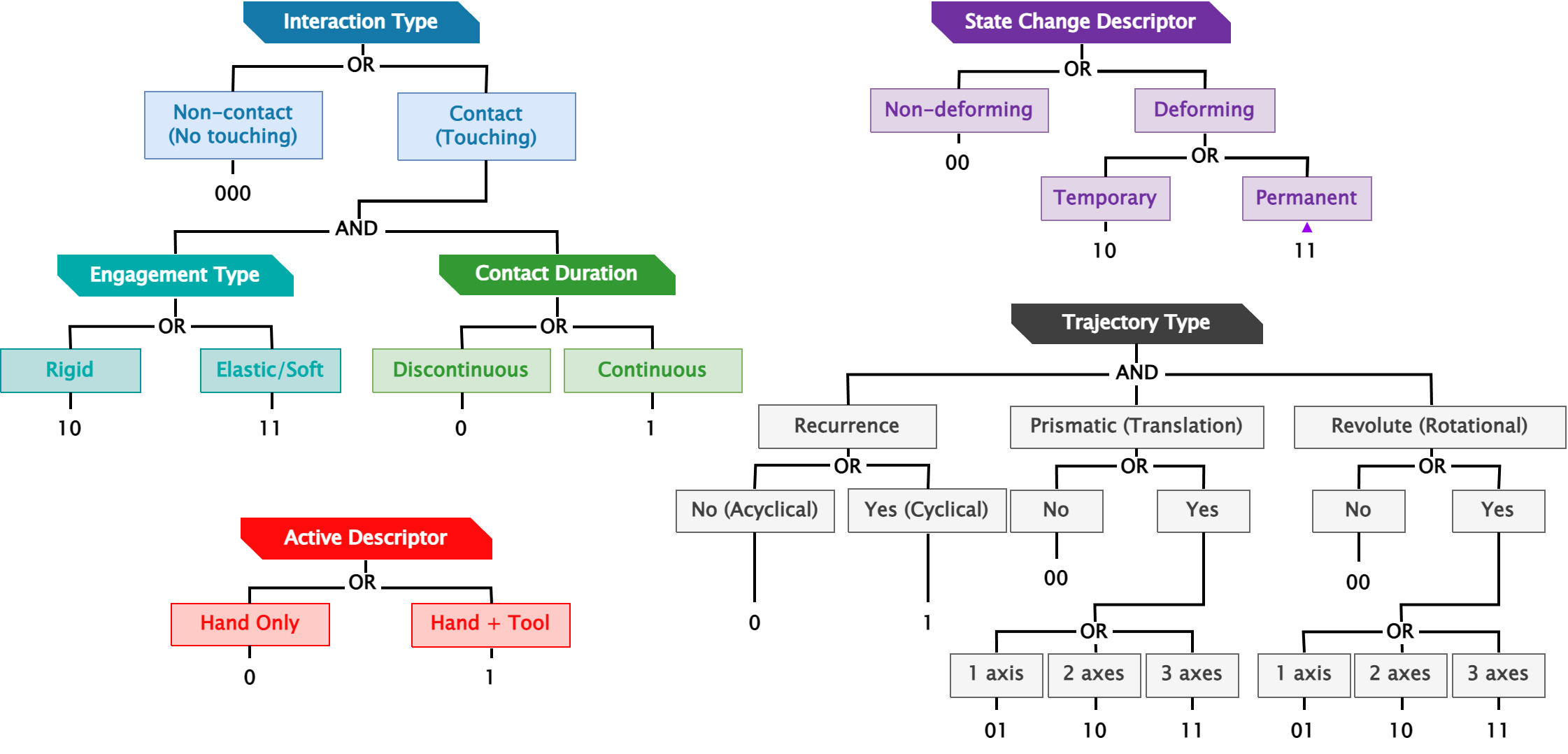

TL;DR – This work uses features from the motion taxonomy to improve action recognition on egocentric videos from the EPIC-KITCHENS dataset. It does so by integrating motion code detection for action sequences.